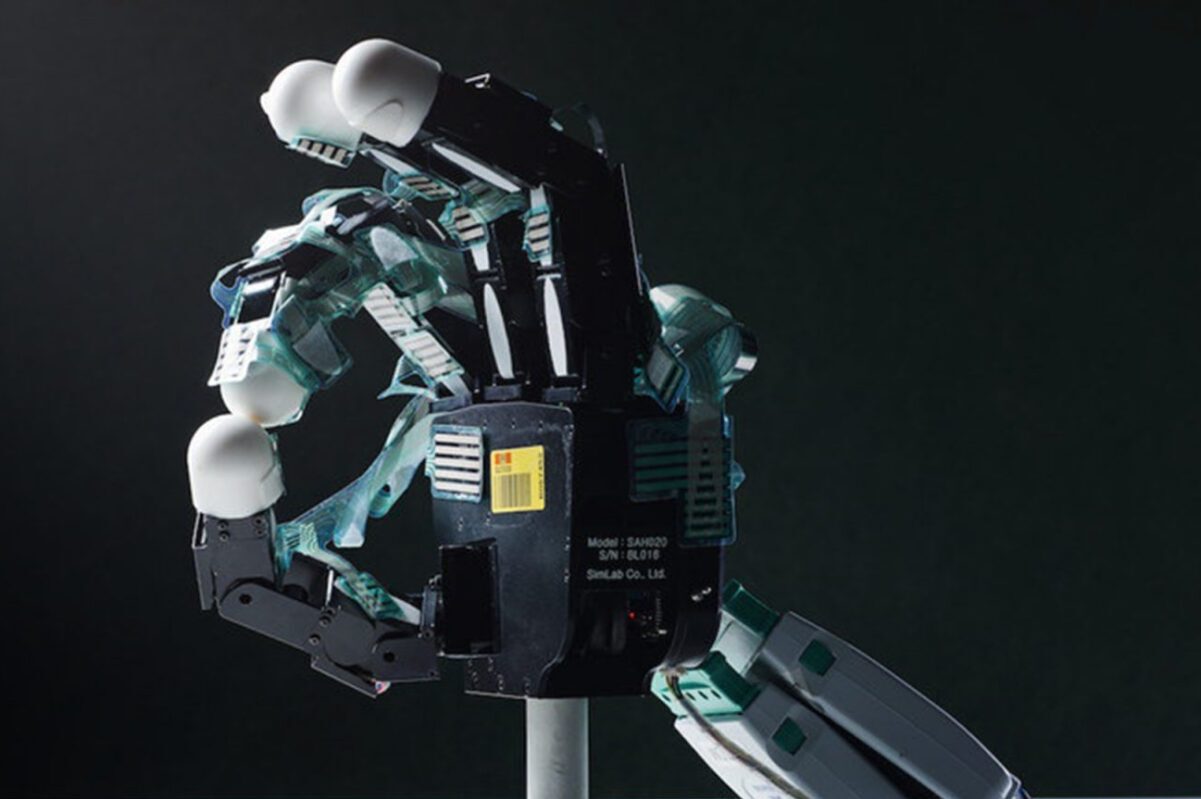

Deep learning for robots has advanced considerably in recent years. It lets robots learn from data and recognize patterns, which matters for social interaction because it helps robots better understand the nonverbal signals people send each other.

These advances should let robots interact with us more naturally. For example, they could understand and respond to our gestures, body movements, and tone of voice. That would make it easier for us to communicate with them, and could eventually make human-robot interaction feel genuinely natural.

What Are the Recent Advances in Deep Learning for Robots?

Deep learning enables computers to learn from data without requiring explicit programming. It involves passing data through a series of layers, extracting increasingly abstract features.
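The idea of passing data through a series of layers can be sketched in a few lines of plain Python. This is only a minimal illustration: the function names `dense` and `forward`, the ReLU activation, and the hand-picked weights are all assumptions made for the example. A real network would learn its weights from data rather than have them written in by hand.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: a weighted sum per output unit, then ReLU."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU keeps positive values only
    return outputs


def forward(inputs, layers):
    """Pass the input through each layer in turn, keeping every intermediate result."""
    activations = [inputs]
    for weights, biases in layers:
        activations.append(dense(activations[-1], weights, biases))
    return activations


# Hypothetical, hand-picked weights (a trained network would learn these).
layers = [
    ([[1.0, -1.0, 0.5], [0.0, 2.0, -1.0]], [0.0, 0.5]),  # 3 inputs -> 2 features
    ([[1.0, 1.0]], [-1.0]),                              # 2 features -> 1 output
]

activations = forward([1.0, 2.0, 3.0], layers)
for depth, values in enumerate(activations):
    print(f"layer {depth}: {values}")
```

Each layer sees only the output of the layer before it, which is how later layers end up working with more abstract features than the raw input.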

Several deep learning techniques have proved especially useful for robots. One is the convolutional neural network, which learns visual features directly from images. This has led to the development of robots that can recognize faces and emotional expressions.
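To make the notion of image features concrete, here is a rough sketch of the sliding-window operation at the core of a convolutional layer. The `conv2d` helper, the tiny 3x4 image, and the hand-written `vertical_edge` kernel are illustrative assumptions. In a real convolutional network the kernel values are learned during training, and many kernels are stacked across several layers.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A tiny grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds where brightness increases left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0]]
```

The feature map is nonzero only in the column where the dark region meets the bright one, so this kernel acts as a simple edge detector. Face and expression recognition build on many such learned detectors combined in deeper layers.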

Another advance is the use of recurrent neural networks, which learn patterns in sequential data such as speech or text. This has led to the development of robots that can understand natural language and dialogue.
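What sets a recurrent network apart is that it carries a hidden state from one step of a sequence to the next. The sketch below shows that mechanism with a single hidden unit. The function `rnn_run` and the weights `w_in` and `w_rec` are hypothetical values chosen for illustration, not a trained language model.

```python
import math


def rnn_run(sequence, w_in, w_rec, bias=0.0):
    """Run a one-unit recurrent cell over a sequence and return every hidden state."""
    hidden = 0.0
    states = []
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
        states.append(hidden)
    return states


# The same values in a different order give different final states.
early_signal = rnn_run([1.0, 0.0, 0.0], w_in=1.0, w_rec=0.5)
late_signal = rnn_run([0.0, 0.0, 1.0], w_in=1.0, w_rec=0.5)

print("signal first:", early_signal)
print("signal last: ", late_signal)
```

Because the hidden state is fed back at every step, the network's output reflects the order of its inputs and not just their values. Word order in a sentence is exactly the kind of information that understanding dialogue depends on.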

How Can Deep Learning Help Robots Better Understand Nonverbal Social Behavior?

Deep learning can help robots understand nonverbal social behavior by teaching them to recognize patterns in human interaction. By observing how people interact, robots can learn to identify facial expressions, body language, and tone of voice. This will allow them to better understand the emotions and intentions of the people around them.

In addition, deep learning can help robots predict human behavior. By learning people's habits and preferences, robots can better anticipate their actions. This will help them interact with people and meet their needs.

How Might These Advances Be Used in Future Applications?

One possibility is that robots equipped with this type of deep learning could be used in assistive or therapeutic roles. For example, they could be used to help autistic children or adults to interact and communicate more effectively.

These robots could be used as part of a social coaching system, providing real-time feedback to people on their nonverbal behavior. This could be useful for people with social anxiety.

Finally, these robots could help us better understand human social behavior. For example, they could be used to study how people with different mental disorders interact with others.

Conclusion

In sum, the findings of this study suggest that by using deep learning, robots may be able to better understand and replicate nonverbal social behaviors, opening a new area of research for those studying human-robot interaction. However, more research is needed to understand the complexities of human social behavior before these advances can be fully realized.